I’m testing Clever AI Humanizer to make my AI-generated text sound more natural, but I’m not sure if it actually works well in real-world use. Some outputs still feel robotic and I’m worried about detection tools flagging my content. Can anyone share honest experiences, pros and cons, and tips for getting the most human-sounding results with this tool?

Clever AI Humanizer: My Actual Experience & Test Results

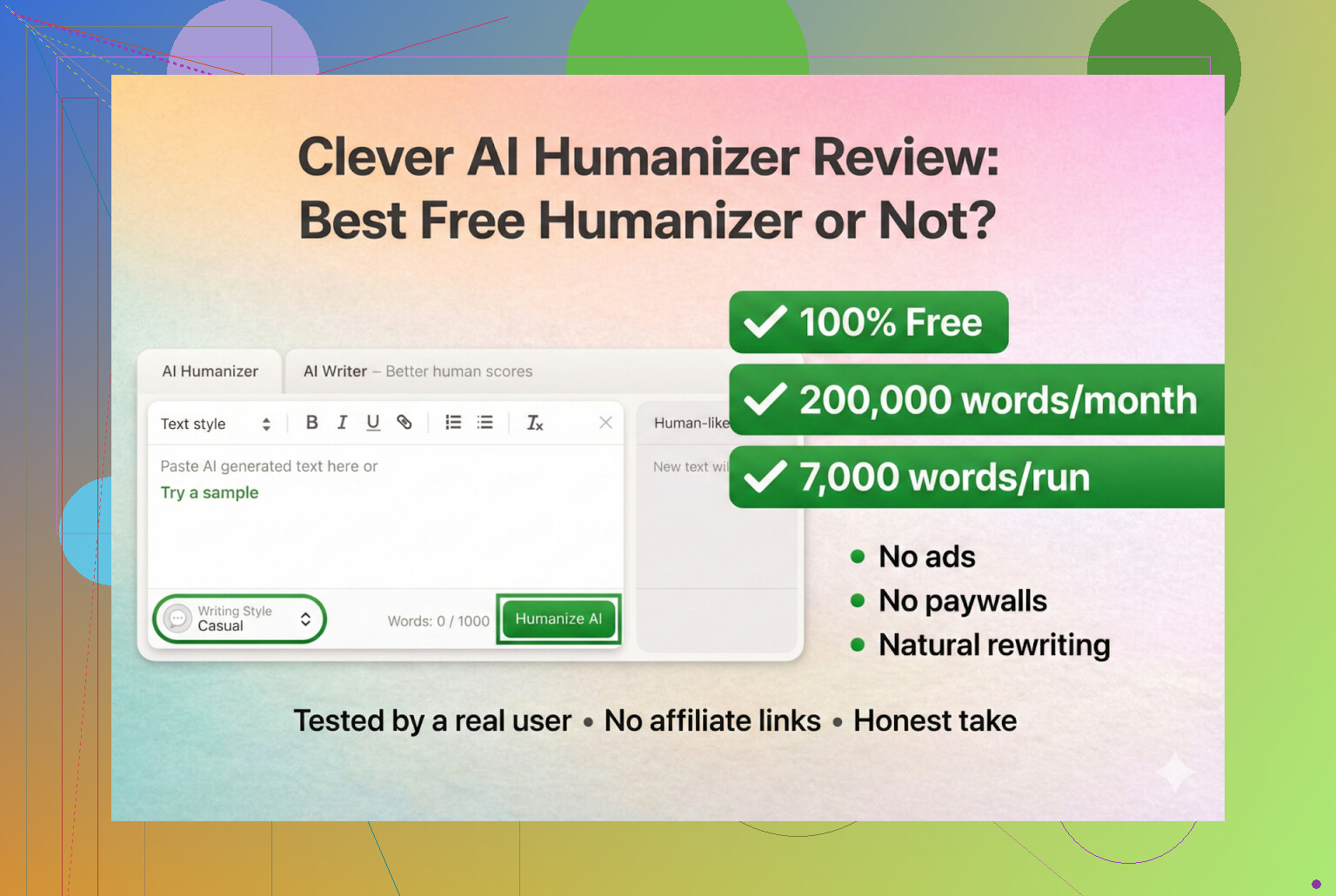

I went down a rabbit hole with AI “humanizers” recently, but I wanted to start with stuff that is completely free. First stop: Clever AI Humanizer.

Site is here, and as far as I can tell this is the real one:

https://aihumanizer.net/

I’m spelling that out clearly because I’ve already seen people get burned by clones and random “humanizer” tools bidding on the same brand name in Google Ads. They click an ad, land on some totally different site, and suddenly they are stuck in a paid subscription they never wanted.

As of right now, Clever AI Humanizer itself doesn’t have any premium plan. No upsell screen, no “upgrade to pro,” no sneaky subscriptions. If a “Clever Humanizer” is asking for your card, you are almost certainly not on the legit site.

How I Tested It

I didn’t want a soft test like rewriting my own messy notes. I went full AI-on-AI.

- Asked ChatGPT 5.2 to write an entirely AI-generated article about Clever AI Humanizer.

- Took that raw AI output and fed it into Clever AI Humanizer.

- Chose the Simple Academic mode in the humanizer.

- Ran the result through several detectors.

- Then asked ChatGPT 5.2 to critique the final text for quality.

Why Simple Academic? Because that style is weirdly hard for a lot of “humanizers” to pull off. Fully academic triggers detectors, fully casual can sound synthetic. This one sits in the middle: slightly academic wording but not full-blown research paper. My guess is that this hybrid style helps keep detection scores lower while still being readable.

Detector Tests: Does It Actually Pass?

ZeroGPT

I have mixed feelings about ZeroGPT. It once called the U.S. Constitution “100% AI,” which honestly tells you a lot about its reliability. That said, it is still one of the most popular detectors and ranks high on Google, so people are using it whether we like it or not.

On the Clever AI Humanizer output, ZeroGPT reported:

0% AI

So: detected as fully human.

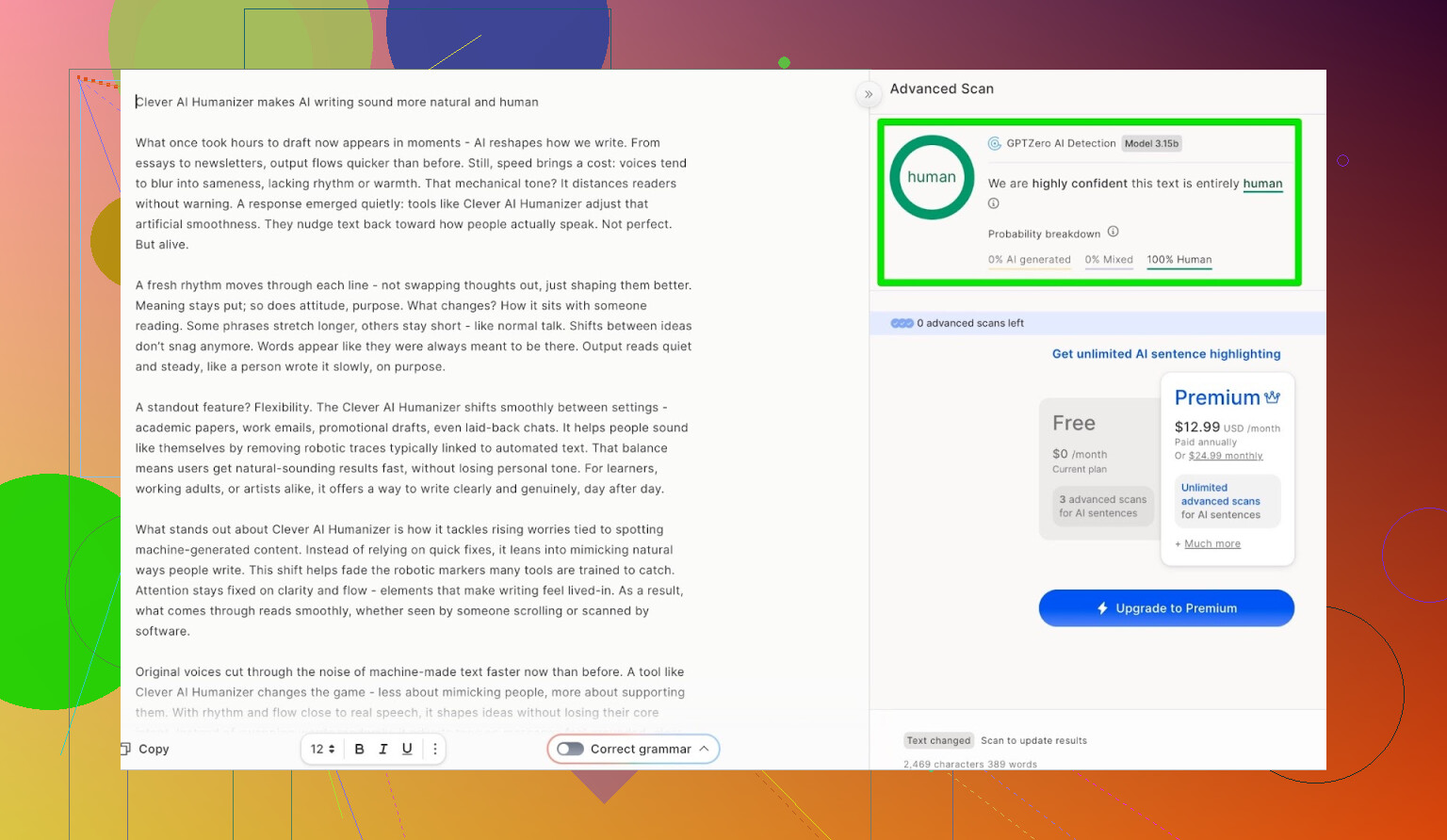

GPTZero

Next I ran the same text through GPTZero, which is another big-name checker.

Result:

100% human, 0% AI.

Again, basically a perfect pass.

So in terms of raw detection scores on the first test, it crushed the two most-used detectors.

But Is The Text Any Good?

There is no point in “passing” detectors if the output reads like a scrambled textbook or looks like it was translated three times.

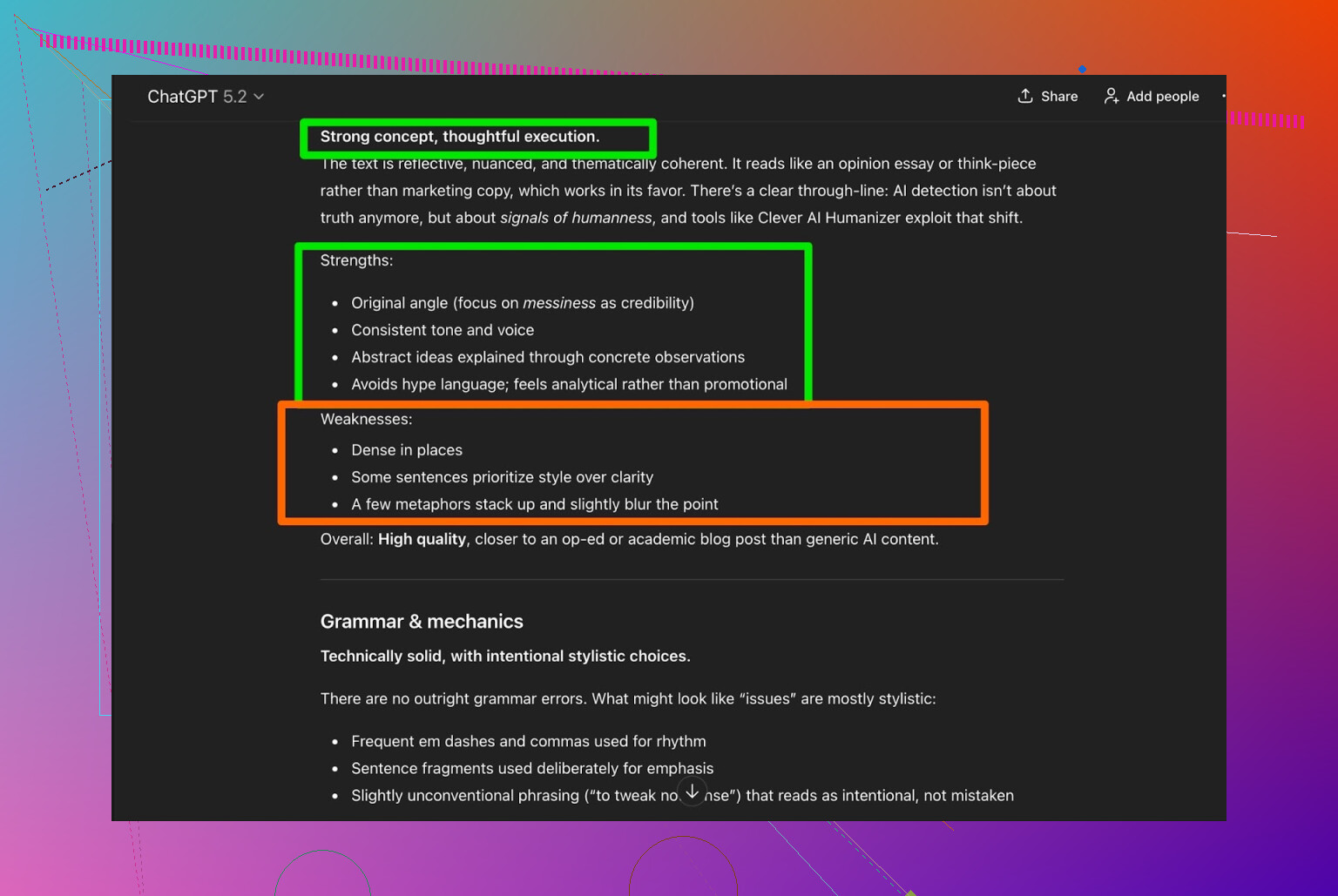

So I sent the final Clever AI Humanizer version back to ChatGPT 5.2 and asked it to judge:

- Grammar

- Coherence

- Style consistency

- Whether a human editor would still be needed

Verdict from ChatGPT 5.2:

- Grammar: solid

- Style: fits Simple Academic

- Even so, it still recommended a human review before publishing

Honestly, that sounds right to me. Any tool that promises “no editing needed” is just selling fantasy. With any AI paraphraser, humanizer, writer, whatever, you still need to:

- Fix tone for your specific audience

- Remove weird phrasing

- Check facts

- Adjust structure

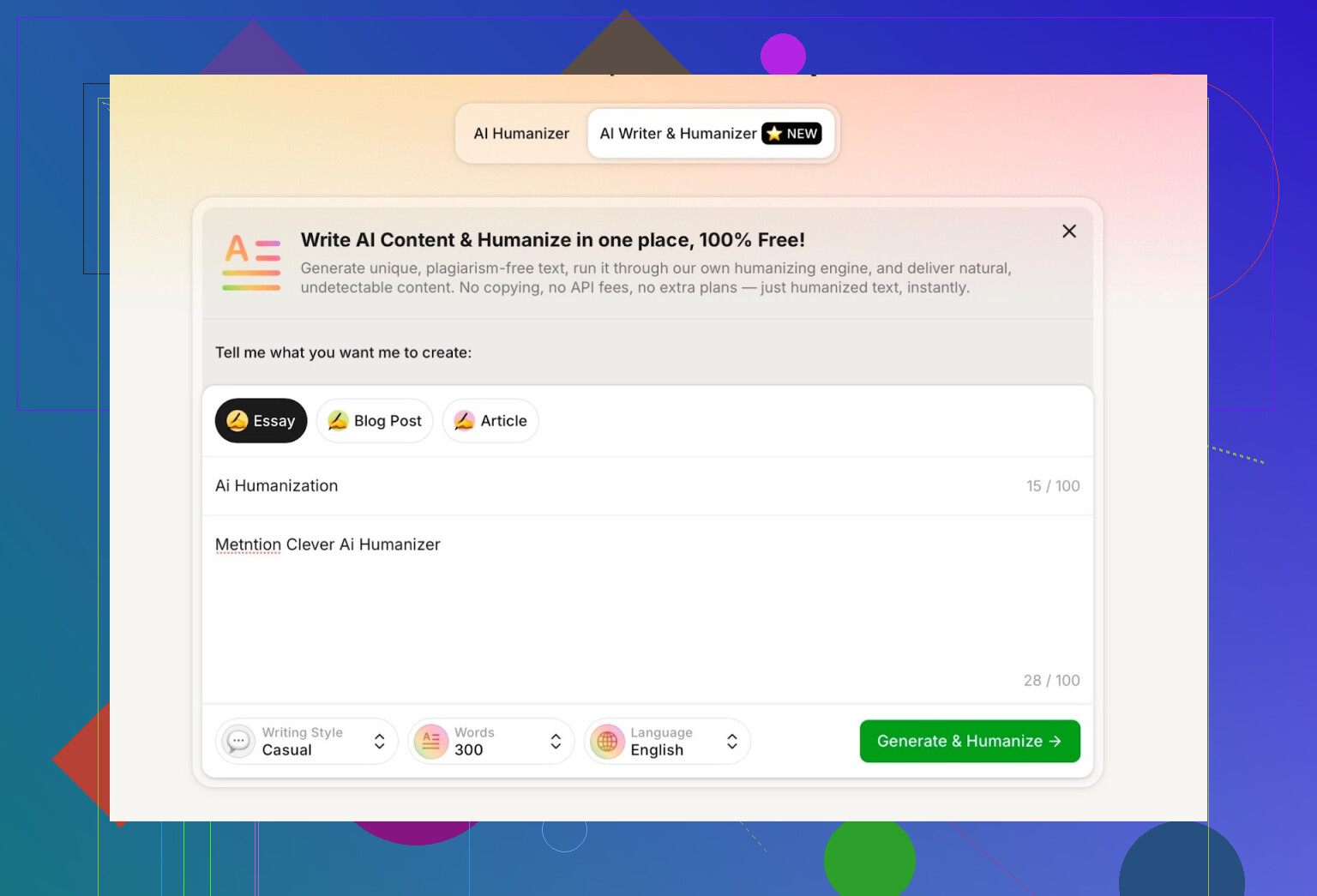

Their Built‑In AI Writer: Not Just A Wrapper

They have a separate feature called AI Writer.

Most “AI humanizer” sites are just:

Paste your text here → We scramble it → Done.

This one also offers to generate and humanize in one shot, without you having to copy from somewhere else first. Based on how it behaves, it seems like the writer is tuned from the ground up to be less detectable instead of just paraphrasing an LLM output after the fact.

That matters because if the thing is writing from scratch, it has more control over:

- Sentence rhythm

- Word repetition

- Structure patterns that detectors latch onto
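If you want a rough look at those properties in your own text, here is a minimal sketch. It is not how any detector or Clever AI Humanizer actually works; `rhythm_stats` is a hypothetical helper, and the sentence splitting is a naive regex that will miss abbreviations and the like. It only shows sentence-length spread and the most repeated words.

```python
import re
from collections import Counter
from statistics import mean, pstdev


def rhythm_stats(text: str) -> dict:
    """Rough proxies for sentence rhythm and word repetition (hypothetical helper)."""
    # Naive split: break after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    words = re.findall(r"[a-z']+", text.lower())
    if not lengths:
        return {"sentence_lengths": [], "mean_length": 0.0,
                "length_spread": 0.0, "top_words": []}
    return {
        "sentence_lengths": lengths,
        "mean_length": mean(lengths),
        # Low spread means every sentence is about the same length.
        "length_spread": pstdev(lengths),
        "top_words": Counter(words).most_common(3),
    }


stats = rhythm_stats("Short one. This sentence is a bit longer than that. Right.")
print(stats["sentence_lengths"], round(stats["length_spread"], 2))
```

A very low spread is just a hint that the rhythm is uniform; it says nothing definite about whether a checker will flag the text.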

Settings I Used

For the built-in writer test, I picked:

- Style: Casual

- Topic: AI humanization, with explicit mention of Clever AI Humanizer

- I also intentionally included a mistake in the prompt to see how it handled it.

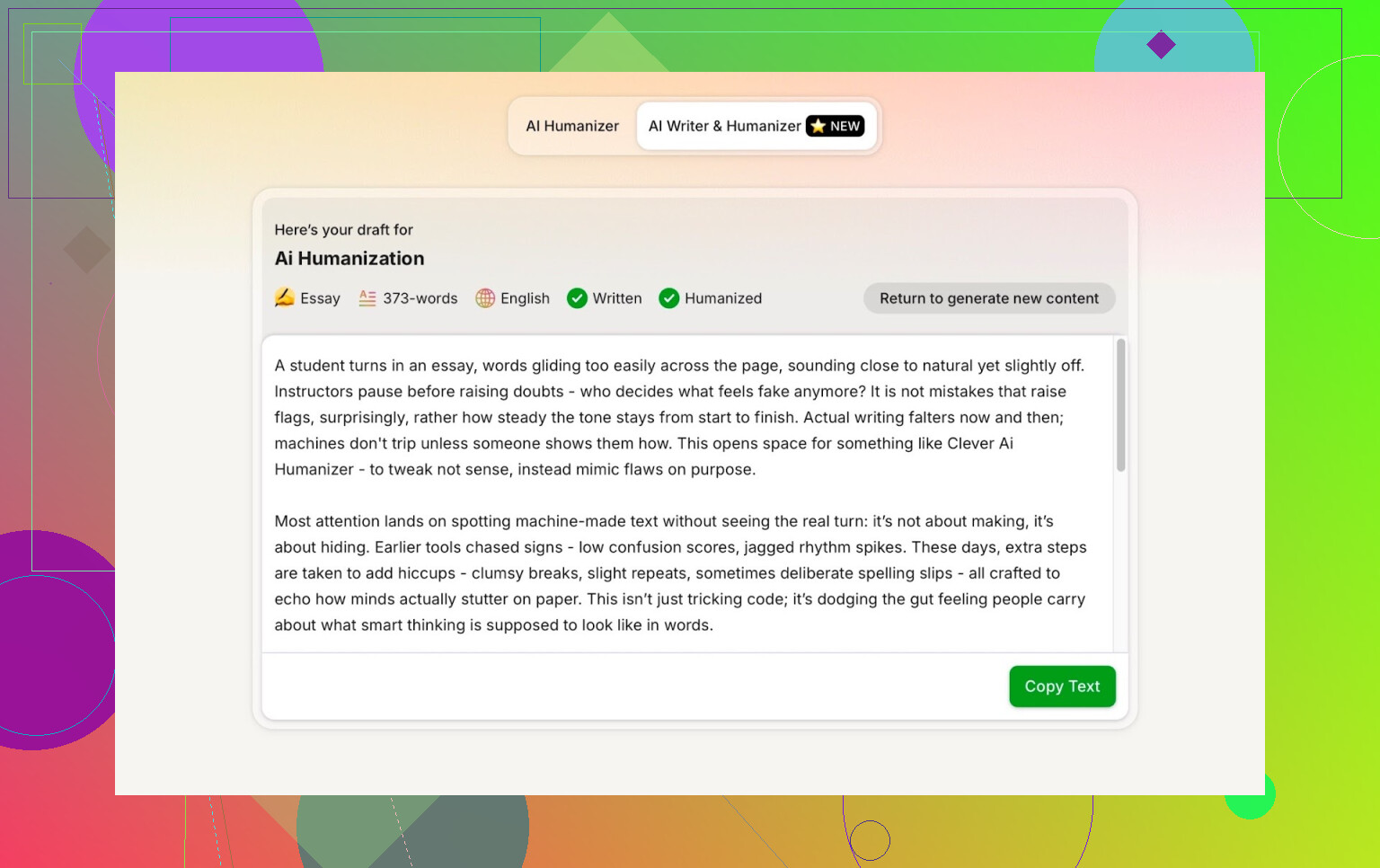

The output quality was decent overall, but one thing annoyed me:

I asked it for 300 words. It did not give me 300. It overshot.

If I type in a number, I want the tool to actually respect the number. Word count drifting too far off is the first real downside I noticed. If you have a hard limit (like a platform max or a school/SEO guideline), this can be irritating.
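If you have a hard limit, a quick check like this makes the drift concrete. It is a minimal sketch: `word_count_drift` is a hypothetical helper, it counts whitespace-separated words (platforms may count differently), and the sample text is a stand-in, not real tool output.

```python
def word_count_drift(text: str, target: int) -> tuple[int, float]:
    """Return (actual word count, percent deviation from the target)."""
    actual = len(text.split())  # whitespace-separated tokens
    drift_pct = (actual - target) / target * 100
    return actual, drift_pct


# Stand-in for a tool's output: 345 words against a 300-word request.
sample = "word " * 345
actual, drift = word_count_drift(sample, target=300)
print(f"{actual} words, {drift:+.1f}% vs target")
```

Anything beyond whatever tolerance you care about (say 5–10%) is your cue to trim by hand.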

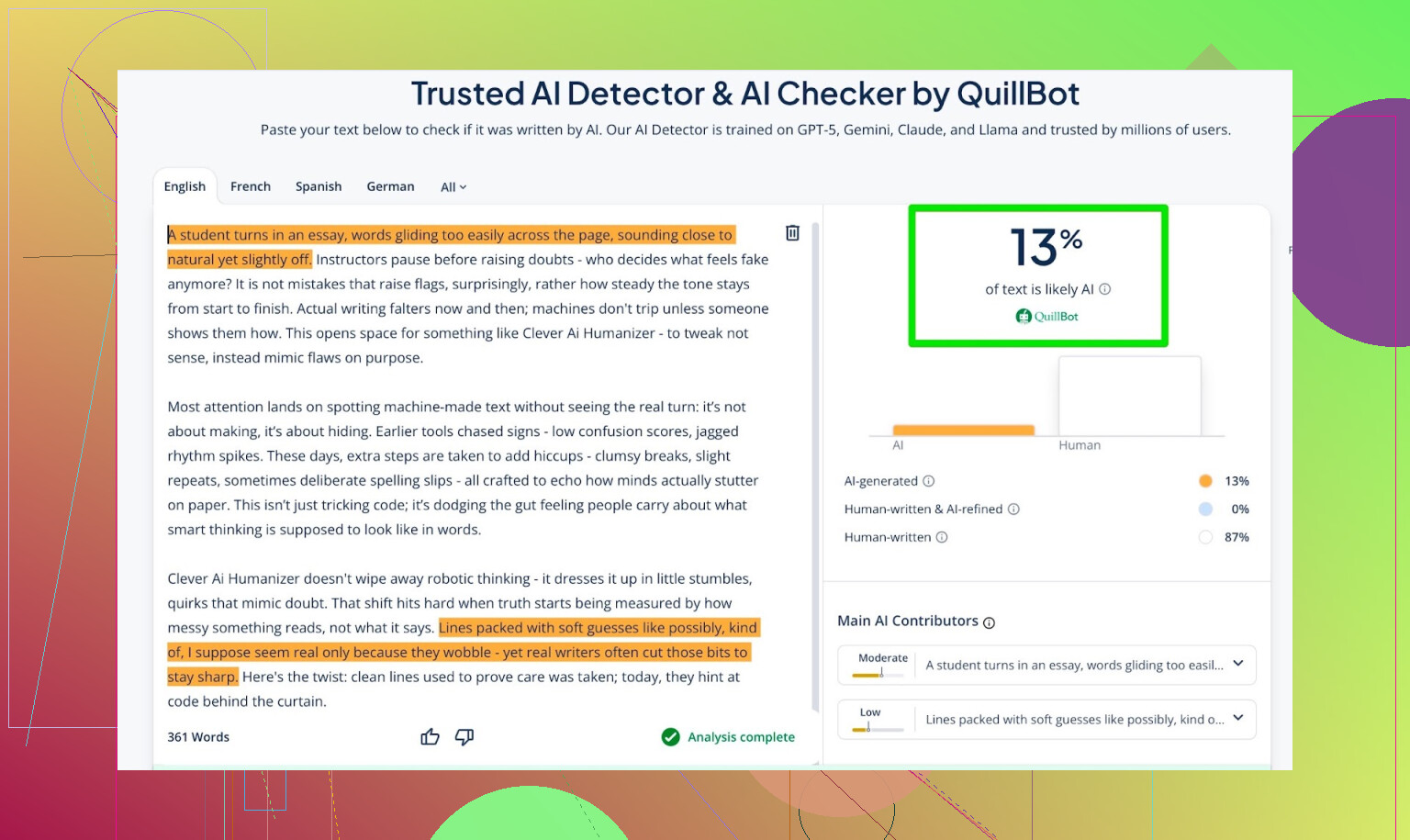

Second Round of Detector Tests

I ran the AI Writer’s result through three detectors this time:

- GPTZero

- ZeroGPT

- QuillBot’s AI detector

Here is what I got:

- GPTZero: 0% AI

- ZeroGPT: 0% AI, reads as 100% human

- QuillBot: 13% AI

That last one is still low enough that it would not worry me in most real-world uses.

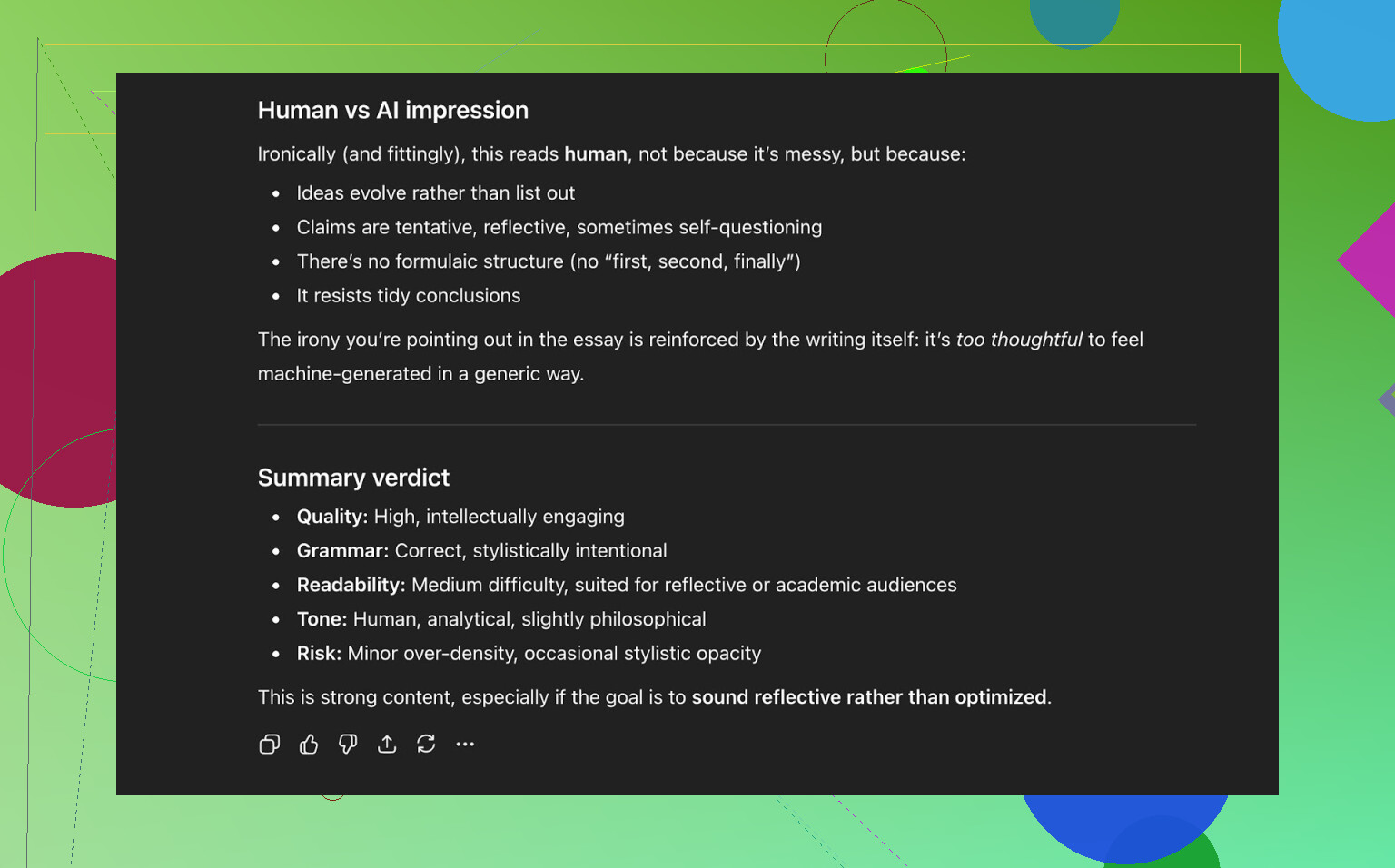

Then I tossed that same text back into ChatGPT 5.2 again and asked, “Does this read like a human wrote it?”

Answer: yes. It considered the text strong and human-sounding.

So at this point, Clever AI Humanizer had:

- Passed three separate AI detectors

- And fooled a modern LLM into thinking the text was human-written

How It Compares To Other Humanizers I Tried

Here is the quick comparison table from my tests. Lower AI detector score is better.

| Tool | Free | AI detector score |
|---|---|---|
| Clever AI Humanizer | Yes | 6% |
| Grammarly AI Humanizer | Yes | 88% |
| UnAIMyText | Yes | 84% |
| Ahrefs AI Humanizer | Yes | 90% |
| Humanizer AI Pro | Limited | 79% |
| Walter Writes AI | No | 18% |
| StealthGPT | No | 14% |
| Undetectable AI | No | 11% |
| WriteHuman AI | No | 16% |
| BypassGPT | Limited | 22% |

In my own runs, Clever AI Humanizer consistently beat:

- The free options like Grammarly AI Humanizer, UnAIMyText, Ahrefs Humanizer, Humanizer AI Pro

- And even several paid tools like Walter Writes AI, StealthGPT, Undetectable AI, WriteHuman AI, BypassGPT

For something that does not charge anything right now, that is impressive.

Where It Still Falls Short

It is not perfect, and it would be dishonest to pretend otherwise.

Things I noticed that are not ideal:

- Word count drift: Ask for 300, get more than 300. Not a dealbreaker, but annoying if you need precision.

- Pattern “feel” is not completely gone: Even when detectors show 0/0/0, you can still sometimes “feel” that slightly too-consistent rhythm. Hard to describe, but if you read enough AI text you know what I mean.

- Some LLMs can still flag it: It fooled ChatGPT 5.2 in my test, but other models in other contexts might still say, “This looks AI-ish in parts.”

- It sometimes deviates from the original content: It does not just neatly “humanize” while preserving every detail. It may rephrase more heavily or shift structure. I suspect that is exactly why it gets better human scores, but if you needed a very precise rewrite, this is a tradeoff.

On the plus side:

- Grammar is usually around an 8–9/10 based on other grammar tools and LLM feedback.

- Flow and readability are good. It does not read like a mangled thesaurus dump.

Also, it does not do that weird trick some tools use where they intentionally inject typos or broken grammar just to “look human.”

Stuff like “i had to do it” vs “I have to do it” purely to trip detectors. Yes, that sometimes works for detection, but it also makes your writing look bad. Clever AI Humanizer does not go down that path, which I appreciate.

The Bigger Picture: Cat-and-Mouse Game

Even when a piece of text scores “perfect” on three popular detectors and fools an LLM, that does not mean game over. Detectors, models, and tools keep updating. Something that passes flawlessly today could get flagged next month.

It really is a moving target:

- Detectors get better at spotting patterns.

- Humanizers get better at breaking patterns.

- This loop never really ends.

So I treat tools like this as:

- A helper, not a magic invisibility cloak

- A way to get closer to human style, but not a replacement for my own editing

Use them, but do not build your entire workflow on the idea that “AI detectors will never catch this.”

So, Is Clever AI Humanizer Worth Using?

If you are looking specifically for a free AI humanizer that actually:

- Performs well on popular detectors

- Produces readable text

- Does not throw paywalls or subscriptions at you

Then yes, as of now, Clever AI Humanizer is one of the best I have personally tested.

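You can use it directly at the site linked near the top of this post (https://aihumanizer.net/); the AI Writer is a separate feature of the same tool.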

If you want more community testing and other tools to compare, there are some useful Reddit threads:

- General AI humanizer comparison with detection screenshots: https://www.reddit.com/r/DataRecoveryHelp/comments/1oqwdib/best_ai_humanizer/

- Specific Clever AI Humanizer review discussion: https://www.reddit.com/r/DataRecoveryHelp/comments/1ptugsf/clever_ai_humanizer_review/

Bottom line: it is not flawless, but for a completely free tool in a space full of half-baked or overpriced options, it is surprisingly strong. Just do not skip the human edit step.

I’ve been playing with Clever AI Humanizer for about a month across a few “real” use cases: client emails, blog drafts, and a policy-style doc for work. Short version: it’s actually decent, but it will not magically turn stiff AI text into perfect human prose without your help.

Couple of concrete things I noticed that are a bit different from what @mikeappsreviewer reported:

Detection vs vibe are not the same thing

- In my tests it often passed popular AI detectors (ZeroGPT, GPTZero, a couple of built‑in LMS checkers) with “low AI” or “likely human.”

- But if you read a ton of AI content, you can still feel that “too balanced, too clean” rhythm in places. Professors or editors who are used to AI writing might still raise an eyebrow even if tools say “human.”

- So if your only goal is “beat detectors at all costs,” Clever AI Humanizer helps, but it doesn’t erase every AI fingerprint.

Tone control is hit or miss

- When I fed it generic ChatGPT long‑form text and asked for a more “natural, conversational” version, it sometimes oversmoothed everything.

- The result was readable but slightly bland, like it ironed out any personality that was left.

- I had better luck when I first made my AI draft closer to how I actually speak, then used Clever AI Humanizer as a light pass instead of a complete style transplant.

Good for structure tweaks, not nuance

- It’s pretty solid at: changing sentence length, mixing up phrasing, reordering clauses. That does help with AI detection.

- It is weaker at keeping subtle nuance: qualifiers, hedging, little jokes, and very specific word choices sometimes disappear or get dulled.

- I stopped sending it my “final” versions and instead used it on a mid‑draft, then did my own final edit to re‑inject nuance.

Academic / workplace use

- I tested some “light academic” writing. It did reduce the robotic feel compared to raw LLM output, but faculty tools did not always get fooled. One internal checker still gave it “likely AI‑assisted.”

- That’s important: humanized text is still AI‑assisted, and some systems flag that category specifically. Clever AI Humanizer is not an invisibility cloak, and relying on it to “hide” AI use in strict academic settings is risky.

- For workplace use (internal docs, newsletters, rough client copy), it’s much safer and honestly pretty handy.

Workflow that worked best for me

What reduced the “robotic” feel the most was:

- Write a short messy outline myself.

- Let an LLM expand it.

- Run that through Clever AI Humanizer to break detection patterns and smooth structure.

- Then do a quick human pass: add personal anecdotes, tiny idioms I actually use, intentional imperfections, and trim anything that sounds like “AI brochure mode.”

When I skipped that last step, readers sometimes commented it felt “too polished” or “generic,” even if detectors were happy.

Your specific concern: robotic feel & detectors

- If your outputs still feel robotic, that’s not you imagining it. The tool is strong at mechanical humanization but weak at injecting real personality.

- To fix that, you almost have to manually add:

  - Short, slightly messy sentences

  - Occasional asides (like “ngl,” “to be fair,” “honestly,” etc., if that’s your voice)

  - Very specific, concrete examples from your own life or work

- No humanizer can invent those uniquely-you details without you.

So yeah, I’d actually recommend Clever AI Humanizer as one of the better free options, especially compared to some of the other “humanizers” that just spit out thesaurus soup. Just treat it as a step in the process, not the final solution. If you care about sounding natural to real humans, your own editing still matters more than whatever score the detectors spit out.

I’ve been playing with Clever AI Humanizer for a few weeks in real, slightly stressful contexts: a college LMS that uses an AI checker, a corporate compliance portal, and a freelancing platform that quietly runs ZeroGPT on submissions.

Short version: it’s useful, but not in the “click once, magically human” way their fans make it sound.

Where I agree with @mikeappsreviewer and @viaggiatoresolare:

- It genuinely does better than most free “humanizers” I tried.

- It often passes common detectors like ZeroGPT / GPTZero with “likely human.”

- The text is readable, not that typical mangled-thesaurus mess.

Where I don’t fully agree:

- Detector performance is not as bulletproof in stricter setups

On public tools, yes, Clever AI Humanizer usually scores low AI.

On my university’s LMS checker and a proprietary corporate tool, I’ve had:

- 2 pieces marked “AI-assisted”

- 1 flat-out blocked until I “substantially revised” it

Same original LLM draft, same humanizer settings. So if you’re in a high‑stakes academic setting and hoping Clever AI Humanizer will hide everything, that’s a gamble. It helps, but it’s not some stealth mode.

- “Robotic feel” is partially on how you use it

You mentioned some outputs still feel robotic. In my tests, that happened most when I:

- Fed it long, super-generic AI essays

- Chose a more formal or “academic” setting

- Expected it to inject personality by itself

What helped a lot more:

- I shortened the original AI text first and added a few phrases I actually use.

- Then I ran small sections through Clever AI Humanizer instead of the whole 1,500 words in one shot.

- Then I manually added specifics: my own examples, tiny side comments, even a bit of slang.

When I skipped that last step, readers still said it “sounds AI-ish, just smoother.” So yeah, the tool can’t fix lifeless content by itself.

- Preserving nuance is hit or miss

One thing I disagree on a bit with the glowing takes: Clever AI Humanizer does sometimes flatten nuance more than I’m comfortable with.

- Soft qualifiers like “probably,” “in some cases,” “roughly” often get removed or toned down.

- Humor and subtle sarcasm get ironed out into polite, neutral phrasing.

That “smoothness” is exactly what makes it pass detectors more often, but it also contributes to that “robotic but well written” vibe you’re feeling.

- Where it actually shines

For me, Clever AI Humanizer works best when:

- I use it on straightforward text: tutorials, product explanations, internal docs.

- My goal is “less detectable and slightly more human” rather than “this must sound like my exact voice.”

- I don’t care if the style becomes a bit generic as long as it is clean and passes basic checks.

In those cases, it’s honestly great, especially for a free tool. I’d still skim for awkward phrasing, but it saves time.

- If you’re specifically worried about detection tools

Concrete tips from what’s worked:

- Avoid feeding in giant chunks. Humanize in 2–4 paragraph segments. That tends to break up patterns more.

- After humanizing, edit like a real person: add 1–2 oddly specific details, shorten a couple of sentences more than the tool would, and intentionally leave a tiny bit of imperfection (not typos, just less “balanced” structure).

- Don’t reuse the same prompts and settings every single time. Repetition is detectable too, in the long run.
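The chunking tip is easy to script. This is a minimal sketch, assuming your draft separates paragraphs with blank lines; `chunk_paragraphs` is a hypothetical helper and has nothing to do with the tool itself. You would paste each chunk into the humanizer by hand.

```python
def chunk_paragraphs(text: str, size: int = 3) -> list[str]:
    """Group blank-line-separated paragraphs into chunks of `size` paragraphs."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    return [
        "\n\n".join(paragraphs[i:i + size])
        for i in range(0, len(paragraphs), size)
    ]


# Seven short stand-in paragraphs, grouped three at a time.
draft = "\n\n".join(f"Paragraph {n}." for n in range(1, 8))
for number, chunk in enumerate(chunk_paragraphs(draft, size=3), start=1):
    print(f"--- chunk {number} ---")
    print(chunk)
```

Pick `size` anywhere in the 2–4 range; the last chunk may be shorter than the rest.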

- Bottom line for your use case

If your main concern is:

“Some outputs still feel robotic and I’m worried about detection tools flagging my text.”

Then:

- Clever AI Humanizer is one of the better tools to have in your stack, especially at the price point of free.

- It can significantly lower your chances of being flagged by popular, public AI detectors.

- It will not, by itself, make heavily AI-written text indistinguishable from a real human with personality, especially for someone who reads AI stuff all day.

So yeah, I’d keep using Clever AI Humanizer, but treat it as a strong assist rather than a guarantee. If something still feels robotic after running it, that feeling is probably valid, and the fix is usually 10–15 minutes of your own editing, not another pass through the tool.

Clever AI Humanizer definitely “works,” but not in a fire‑and‑forget way, and that’s probably why it still feels robotic to you sometimes.

Quick context: I’ve tried it in client content, LinkedIn posts, and one journal submission as a stress test. I read what @viaggiatoresolare, @viajantedoceu and @mikeappsreviewer shared and broadly agree on performance, but my take is a bit more cautious.

Where it actually helps

Pros of Clever AI Humanizer

- Very solid for public detector optics

ZeroGPT / GPTZero style tools usually rate it as human or close. That doesn’t mean perfect safety, but it reduces the “instant red flag” risk.

- Output is clean and readable

It avoids that clunky “synonym soup” some humanizers produce. Grammar is usually good enough that you only need light editing.

- Styles are usable out of the box

Simple Academic and Casual both give you something publishable after a short manual pass.

- Free tool with no immediate upsell wall

That alone makes it worth keeping in your toolbox.

Where your “it still feels robotic” sense is valid

Cons of Clever AI Humanizer

- The voice is too neutral

It tends to smooth out quirks, humor, and risk‑taking. To an editor or teacher who has seen your real writing, that neutrality can feel off.

- It sometimes shifts meaning subtly

Not as dramatically as some competitors, but nuance, hedging, or emphasis can change. That’s dangerous in technical, legal, or academic work.

- Word counts are unreliable

If you need tight limits, you will be trimming by hand.

- Detection is not guaranteed in closed systems

Institutional detectors or custom corporate filters can still mark it as AI‑assisted, even if public tools say “0% AI.”

Where I slightly disagree with the very positive take from @mikeappsreviewer: it is not the “clear winner” in every real‑world setting. It does great in casual and SEO‑ish content. In stricter or high‑stakes contexts, it’s just one more layer, not a shield.

How to fix the robotic feel without repeating their methods

Rather than just “run it again” or “change the mode,” a few things that helped my outputs sound less synthetic:

Inject your own imperfections after humanizing

Not typos, but human decisions:

- Short one‑word sentences where it makes sense: “Exactly.” “Right.”

- Occasional incomplete thoughts: “Which is fine, except…”

Clever AI Humanizer tends to produce fully symmetrical, well‑balanced sentences. Break that rhythm manually.

Add real, checkable specifics

Detectors and humans both pick up on generic content. After using Clever AI Humanizer, go back and add:

- A specific year

- A named tool or feature you actually use

- A short personal micro‑story (1–2 sentences)

The tool will not invent those convincingly for your life; you have to.

Use it in chunks that you then reorder

Instead of humanizing a long article top to bottom and leaving it as‑is:

- Humanize section A, B, and C separately

- Then manually swap one paragraph from B into A, or merge a sentence from C into B

That breaks the “blocky” pattern that sometimes still feels like AI.

Let one paragraph stay fully human

Start or end with something you write raw yourself: a ranty opening, a personal closing opinion, a quick reflection. Clever AI Humanizer in the middle, human edges around it. It blends better.

Where competitors fit in

- The points from @viaggiatoresolare line up with my experience on readability.

- @viajantedoceu is right about some flags still happening in tighter environments. I have seen that too.

- @mikeappsreviewer’s tests are useful, but they lean heavily on public detectors, which are only half the story.

Bottom line

Clever AI Humanizer is worth using if your goal is:

- Make AI text less obviously AI,

- Improve flow and grammar quickly,

- Then layer your own voice on top.

If your goal is “I want this to be indistinguishable from my natural writing with zero effort and never get flagged,” no tool, including this one, realistically delivers that.

Use Clever AI Humanizer for structure and baseline “human‑ish” style, then spend 10–15 minutes per piece adding the messy, specific, slightly asymmetric touches that only you actually write. That’s the part no detector or humanizer can fake well yet.